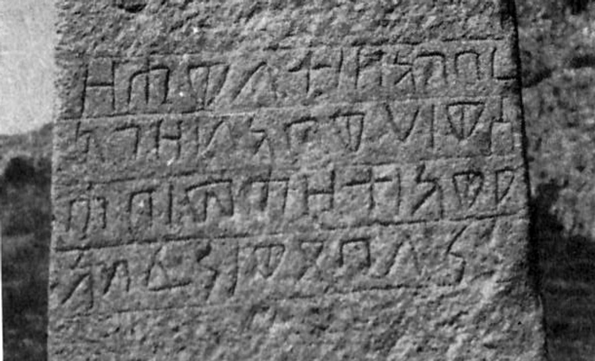

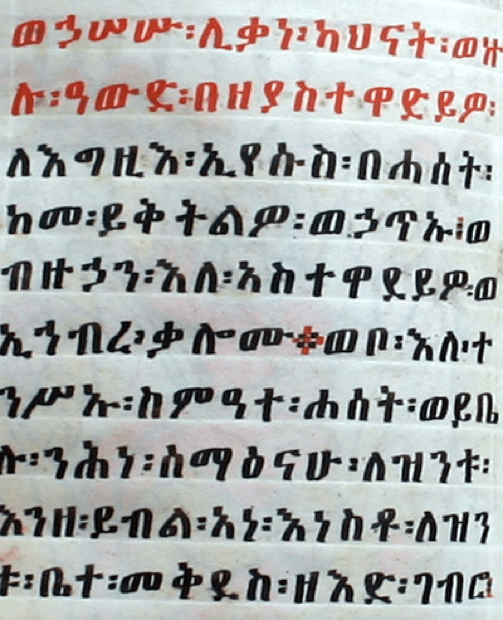

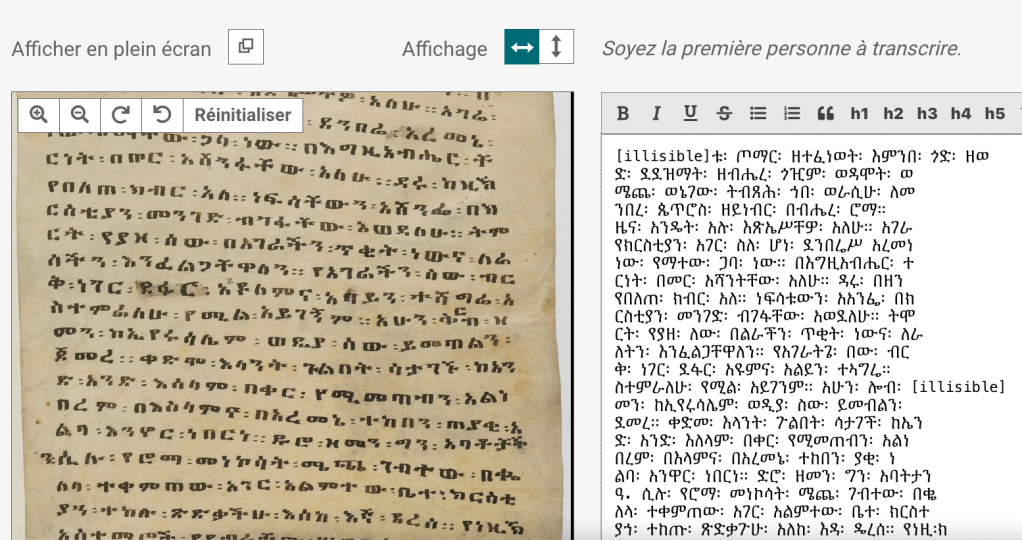

The script used by the Christian cultures of Ethiopia is ancient: it developed from the Sabaean script in the first centuries of the Common Era. It is an alpha-syllabary, meaning that each basic sign stands for a consonant and is modified according to seven vowel sounds (the seven "orders"). A picture is better than an explanation:

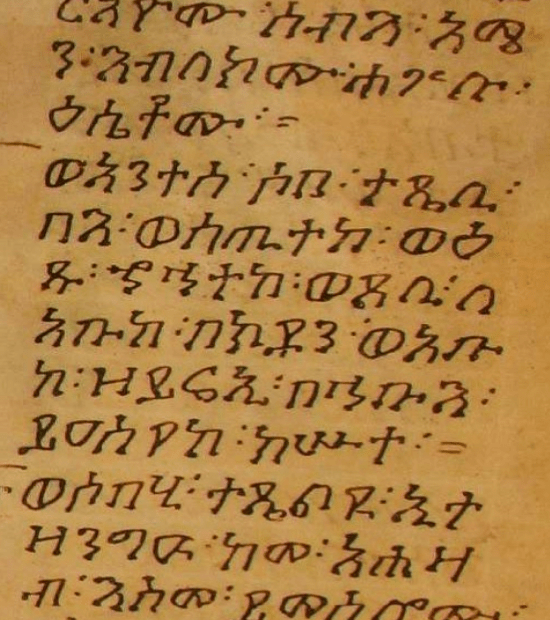
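This regularity carries over into Unicode. In the main Ethiopic block (U+1200 to U+137F), most consonant series are laid out as rows of eight code points: the seven vowel orders followed by one extra slot, often a labialized (-wa) form. The minimal sketch below relies on that layout to split a syllable into its consonant row and vowel order. It is only an approximation: some rows (notably labialized series such as ቈ) have gaps, the punctuation and numerals at the end of the block are not syllables at all, and the helpers `decompose` and `compose` are hypothetical names, not part of any library.

```python
# Sketch: decompose Ethiopic syllables using the layout of the Unicode Ethiopic block.
# Assumption: regular rows of 8 code points (7 vowel orders + 1 extra slot).
# Irregular rows, punctuation and numerals are NOT handled specially.

BLOCK_START = 0x1200  # first code point of the Ethiopic block (ሀ)
BLOCK_END = 0x137F    # last code point of the Ethiopic block

# Conventional vowel values of the seven orders (Ge'ez transliteration).
ORDER_VOWELS = ["ä", "u", "i", "a", "e", "ə", "o"]


def decompose(ch: str) -> tuple[int, int]:
    """Return (row index, order) for an Ethiopic syllable; order is 1-based."""
    cp = ord(ch)
    if not BLOCK_START <= cp <= BLOCK_END:
        raise ValueError(f"{ch!r} is outside the Ethiopic block")
    offset = cp - BLOCK_START
    return offset // 8, offset % 8 + 1


def compose(base: str, order: int) -> str:
    """Return the syllable of the given order (1-8) in the row of `base`."""
    row, _ = decompose(base)
    if not 1 <= order <= 8:
        raise ValueError("order must be between 1 and 8")
    return chr(BLOCK_START + row * 8 + order - 1)


if __name__ == "__main__":
    for ch in "ሰላም":  # "salam", peace
        row, order = decompose(ch)
        vowel = ORDER_VOWELS[order - 1] if order <= 7 else "?"
        print(ch, hex(ord(ch)), "row", row, "order", order, vowel)
    print(compose("ለ", 4))  # 4th order of the l-series
```

For a recognition model this matters in practice: a wrong vowel order is a single wrong code point, just like a wrong consonant.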

There are more than 300 signs in all, including punctuation and numerals. One advantage of this script for automatic recognition is that the writing system has remained very stable over the centuries, at least for the signs used since ancient times to write the classical language, Ge’ez. There are no abbreviations and almost no ligatures, and anyone who can read a newspaper today can read a fifteenth-century manuscript.

The vernacular languages, particularly Amharic and Tigriña, have added extra signs to record sounds specific to them. Some of these signs were spelled in several ways over the centuries until they were standardised in the twentieth century. Thanks in particular to the efforts of Daniel Yacob, all of them are now available in Unicode.

In the twenty-first century, the growing number of digitised Ethiopian manuscripts has led to a proliferation of digital editions of texts and, in Ethiopian studies as in every academic field, to the need to produce large volumes of machine-readable text.

The purpose of this post is to briefly present four research projects that have recently worked on the automatic recognition of Ethiopian handwriting, each with different objectives and methods. Indeed, HTR (Handwritten Text Recognition) cannot be considered in isolation from the research projects that motivate it.

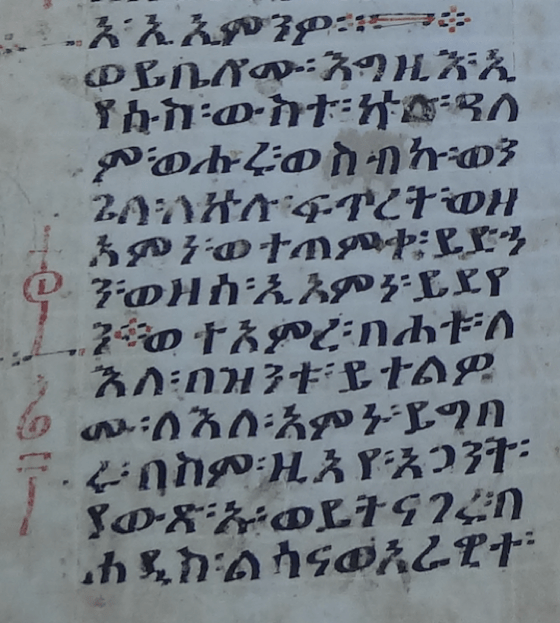

First, Beta Masaheft (BM), a long-term project at the University of Hamburg building a digital environment for the study of Ethiopian manuscript culture, chose to use Transkribus. The advantages are, of course, that the tool already exists, has been tested and is available; that it is solidly maintained; and that it keeps growing more powerful thanks to its very large number of users. The ground truth is a dataset of 50,000 words taken from fewer than a dozen very different manuscripts.

The challenge, described in a 2022 article by Hizkiel Mitiku Alemayu, is not only to save time on transcription but also to produce an export that can easily be reintegrated into BM’s TEI schema and that takes the layout of the manuscript folio into account.
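To give an idea of what such an export involves: Transkribus can export its results as PAGE XML, where each text region contains text lines and each line carries its recognised text. The minimal sketch below turns one PAGE XML page into a generic TEI fragment, with one `<ab>` per region and one `<lb/>` per line. It is an illustration under assumptions, not BM’s actual pipeline: BM’s TEI schema is not described here, so the target elements (`pb`, `ab` with `@facs`, `lb`) and the function names `tei` and `page_to_tei` are hypothetical choices.

```python
import xml.etree.ElementTree as ET

# PAGE XML namespace (2013 schema) and TEI namespace.
PAGE_NS = "http://schema.primaresearch.org/PAGE/gts/pagecontent/2013-07-15"
TEI_NS = "http://www.tei-c.org/ns/1.0"
NS = {"p": PAGE_NS}


def tei(tag: str) -> str:
    """Qualify a tag name with the TEI namespace."""
    return f"{{{TEI_NS}}}{tag}"


def page_to_tei(page_xml: str, folio: str) -> ET.Element:
    """Turn one PAGE XML page into a generic TEI <div> (NOT Beta Masaheft's schema).

    Each TextRegion becomes an <ab> pointing back to the region id via @facs,
    and each TextLine becomes an <lb/> followed by its recognised text.
    """
    root = ET.fromstring(page_xml)
    div = ET.Element(tei("div"))
    ET.SubElement(div, tei("pb"), n=folio)  # page break carrying the folio number
    for region in root.iterfind(".//p:TextRegion", NS):
        ab = ET.SubElement(div, tei("ab"), facs="#" + region.get("id", ""))
        for n, line in enumerate(region.iterfind("p:TextLine", NS), start=1):
            lb = ET.SubElement(ab, tei("lb"), n=str(n))
            # Line-level text only; Word-level TextEquiv elements are ignored.
            lb.tail = line.findtext("p:TextEquiv/p:Unicode", default="", namespaces=NS)
    return div


# A tiny hand-made sample (real exports also carry Coords, Baseline, etc.).
SAMPLE = f"""<PcGts xmlns="{PAGE_NS}">
  <Page imageFilename="f1r.jpg" imageWidth="1000" imageHeight="1500">
    <TextRegion id="r1">
      <TextLine id="r1l1"><TextEquiv><Unicode>በስመ፡ አብ፡</Unicode></TextEquiv></TextLine>
      <TextLine id="r1l2"><TextEquiv><Unicode>ወወልድ፡</Unicode></TextEquiv></TextLine>
    </TextRegion>
  </Page>
</PcGts>"""

if __name__ == "__main__":
    ET.register_namespace("", TEI_NS)  # serialise TEI as the default namespace
    print(ET.tostring(page_to_tei(SAMPLE, "1r"), encoding="unicode"))
```

Keeping the region identifier in `@facs` is one simple way to preserve a link between the edited text and the layout of the folio.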

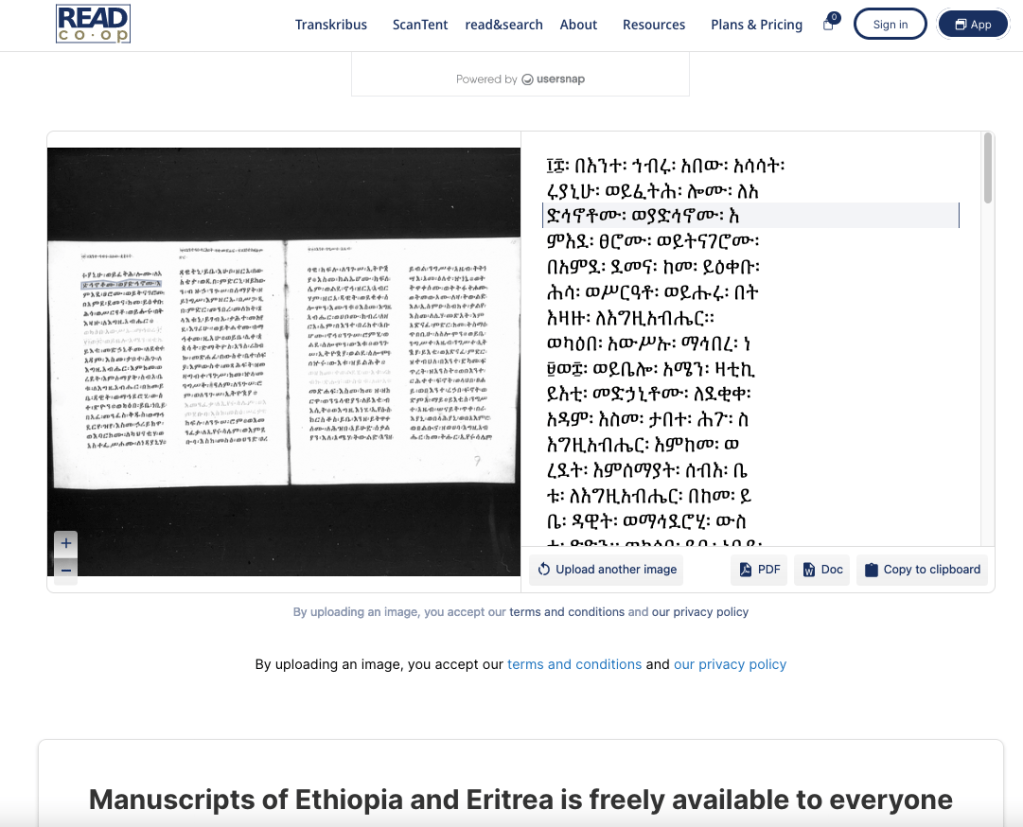

A small tool on the Transkribus website lets you apply this model to images of Ethiopian manuscripts of your choice and obtain transcriptions immediately, online and free of charge. The result is really very good! The model, called “Manuscripts of Ethiopia and Eritrea”, is also available on the Transkribus platform (Client and Lite).

The second project comes from a team at the University of Toronto and the University of Notre Dame, supported by a grant from the Mellon Foundation. They have developed a free, open-source tool that is so light on computing resources that it can run on a personal computer in an almost low-tech setting, without an Internet connection. The team questions the strategy of relying on Transkribus for three reasons: it is not free of charge for large-scale HTR; it requires a good, stable Internet connection; and uploading the images can raise ethical problems. Indeed, many photographs of Ethiopian manuscripts were taken without the owners of the manuscripts, whether religious institutions in Ethiopia or libraries, having anticipated such use. An article by 12 co-authors presents in detail the methodology used to build the datasets and the ground truth; one particularity is that it also uses synthetic (artificially generated) data to cover rare graphemes and spellings. The technical aspects are detailed as well (and this is where I reach my level of incompetence!). A comparison with the results of the BM model in Transkribus seems to favour the Toronto tool (but since reading this article by Alix Chagué, I have become suspicious of results based solely on the CER [Character Error Rate]!)
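For readers wondering what the CER actually measures: it is the minimum number of character substitutions, deletions and insertions needed to turn the reference transcription into the machine output (the Levenshtein distance), divided by the length of the reference. The minimal sketch below computes it from scratch; `levenshtein` and `cer` are illustrative names, not the evaluation code of either project.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of substitutions, deletions and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution (free if the characters match)
            ))
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character Error Rate: edit distance divided by the reference length."""
    if not ref:
        raise ValueError("reference must not be empty")
    return levenshtein(ref, hyp) / len(ref)


if __name__ == "__main__":
    # One wrong vowel order (ላ read as ሎ) in a three-syllable word.
    print(cer("ሰላም", "ሰሎም"))
```

One reason a single figure can mislead: every error weighs the same, so a wrong vowel order, a dropped word separator and a hallucinated character all count as one, regardless of how much they matter to an editor.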

Unless I am mistaken, the dataset and model are not shared, but the tool itself is accessible online and can be downloaded from Samuel Grieggs’s GitHub for installation on a personal computer. In its current version, it can only transcribe line by line, which means that each folio image must first be cut up into separate lines and columns. This makes preparing the data somewhat tedious, but a built-in tool to solve this problem is expected to be added.
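To show what this preparation step involves, here is a deliberately naive sketch of line segmentation by horizontal projection profile: count the dark pixels in each row of a grayscale image and keep the bands of consecutive rows that contain ink. It assumes straight, horizontal lines on a clean background, and it is not the method used (or planned) by the Toronto tool; the function name and thresholds are hypothetical.

```python
import numpy as np


def segment_lines(gray: np.ndarray, ink_threshold: int = 128, min_ink: int = 1,
                  min_height: int = 5) -> list[tuple[int, int]]:
    """Return (top, bottom) row ranges of text lines in a single-column grayscale image."""
    ink = gray < ink_threshold            # True where a pixel is dark (ink)
    profile = ink.sum(axis=1)             # number of dark pixels in each row
    is_text = profile >= min_ink          # rows that contain enough ink
    lines, start = [], None
    for y, flag in enumerate(is_text):
        if flag and start is None:
            start = y                     # a text band begins
        elif not flag and start is not None:
            if y - start >= min_height:
                lines.append((start, y))  # band ends; keep it if it is tall enough
            start = None
    if start is not None and len(is_text) - start >= min_height:
        lines.append((start, len(is_text)))  # band running to the bottom edge
    return lines
```

Each line image is then simply `gray[top:bottom]`. Columns can be separated beforehand with the same function applied to the transposed image (`segment_lines(gray.T)`), which scans vertical bands instead of horizontal ones. Real folios, with skewed lines, rubrics and marginal notes, usually call for a trained layout model rather than this heuristic.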

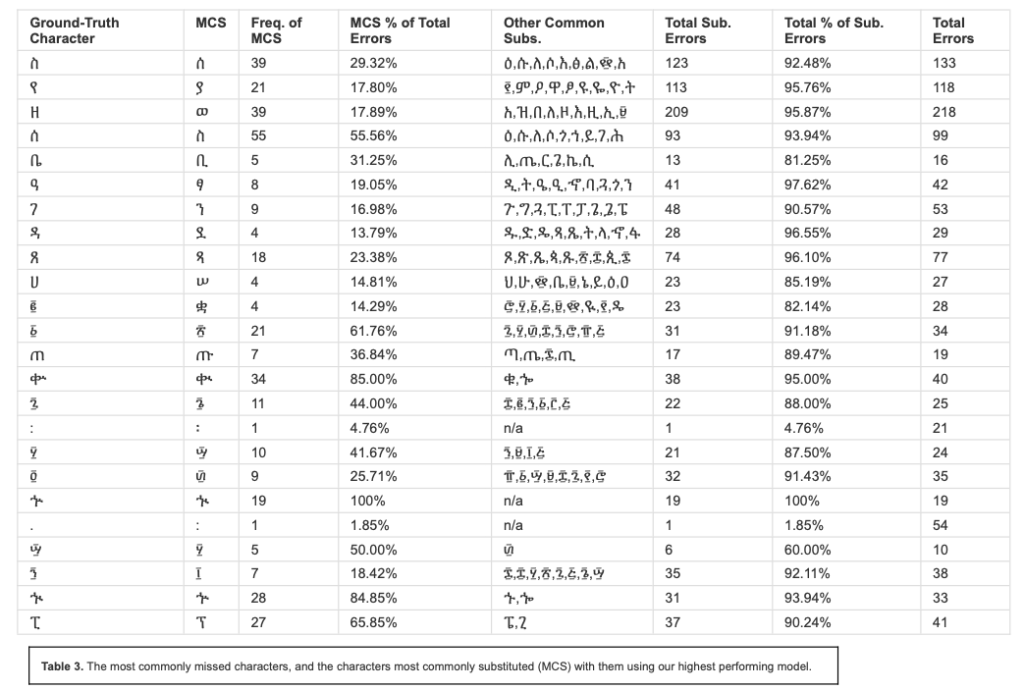

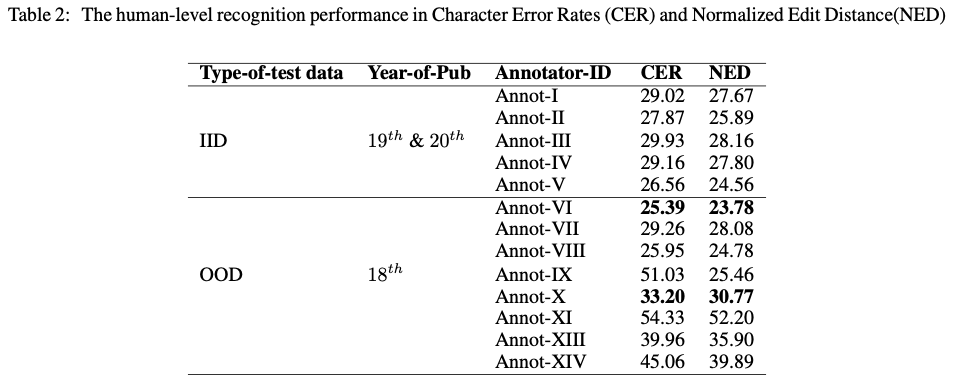

The third project is led by Birhanu Hailu Belay of Bahir Dar University. It is presented in an article co-signed by six other authors, including Isabelle Guyon of INRIA, with support from the ANR, the European Union and Google Brain. This young researcher has been working for several years on the recognition of Ethiopic syllabics, and this article marks a milestone in his long-term project. His aim is to design HTR tools at the lowest technological cost; his motto, as he puts it on his ResearchGate page, is “Democratization of AI (Low resource AI)”. One special feature of this study is that it compares the output of several types of HTR with transcriptions made by human beings. The conclusion is that AI produces better results! So the time spent developing automatic handwriting recognition systems is not wasted! Several HTR methods are then compared, and the study concludes that CTC (Connectionist Temporal Classification) gives the best results at the lowest technological cost.
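In a nutshell, CTC lets a network read a line image as a sequence of narrow vertical slices and output, for each slice, either a character or a special "blank", without anyone having to align characters with pixel positions by hand. Turning those per-slice outputs into text can be done with best-path (greedy) decoding: take the most likely label for each slice, merge consecutive repeats, then drop the blanks. The minimal sketch below shows only this decoding step; it is not the code from the article, and the blank-at-index-0 convention is an assumption.

```python
import numpy as np

BLANK = 0  # assumption: column 0 of the network output is the CTC blank


def ctc_greedy_decode(log_probs: np.ndarray, alphabet: str) -> str:
    """Best-path CTC decoding.

    log_probs has shape (time_steps, len(alphabet) + 1): column 0 is the blank,
    column k (k >= 1) stands for alphabet[k - 1].
    """
    best = log_probs.argmax(axis=1)       # most likely label at each time step
    out, prev = [], BLANK
    for label in best:
        if label != prev and label != BLANK:
            out.append(alphabet[label - 1])  # a new, non-blank label
        prev = label                      # repeats merge unless a blank separates them
    return "".join(out)
```

The blank is what allows genuinely doubled characters: in the sequence ወ, blank, ወ the two signs survive decoding, whereas ወ, ወ collapses into one. More elaborate decoders (beam search, optionally with a language model) can improve on this best path at extra computational cost.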

The datasets are stored in repositories (Zenodo and Hugging Face) and can be downloaded freely, and the model is available and can be tested (see the GitHub of the HDD-Ethiopic project). While on the subject, I would like to mention the HTR United initiative, which brings datasets and models together.

Finally, the fourth project is the one I have undertaken with the company Calfa to transcribe and publish the thousands of pages of notebooks kept by the traveller and scholar Antoine d’Abbadie, who was in Ethiopia in the mid-nineteenth century (see my previous posts here and here). These multilingual, multi-script notebooks are written in Latin script with a large number of idiosyncratic diacritics, in Ethiopian alpha-syllabics, and also in Arabic, Hebrew and Greek. In addition, the page layout is particularly complex, with numerous lists and marginal additions, and, as a rule, every bit of space on the page is used.

After testing Transkribus and eScriptorium (in 2021), we decided to delegate this time-consuming work to a service provider. To cope with the multiplicity of scripts, Calfa developed three character recognition models: Latin, Ethiopian and Latin-Ethiopian. For each page, one of the three was applied after automatically detecting which script predominates. The result is very good, even though it requires a great deal of proofreading. The recognition of the Ethiopian script, which Antoine d’Abbadie wrote with great care, is far better than that of his Latin script, which is very cursive and hastily scribbled.
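The post does not say how Calfa detects the predominant script, and a production system would presumably work on the page image itself. Purely to illustrate the routing idea, the sketch below picks a model from the proportion of Ethiopic versus Latin letters in a text, for instance a quick first-pass transcription. The thresholds (80% and 20%) and the returned model names are hypothetical.

```python
import unicodedata

# Ethiopic code point ranges: main block, Supplement, Extended, Extended-A, Extended-B.
ETHIOPIC_RANGES = [(0x1200, 0x137F), (0x1380, 0x139F), (0x2D80, 0x2DDF),
                   (0xAB00, 0xAB2F), (0x1E7E0, 0x1E7FF)]


def is_ethiopic(ch: str) -> bool:
    cp = ord(ch)
    return any(lo <= cp <= hi for lo, hi in ETHIOPIC_RANGES)


def is_latin(ch: str) -> bool:
    # Covers ASCII letters and precomposed accented Latin letters such as "é" or "ā".
    return ch.isalpha() and unicodedata.name(ch, "").startswith("LATIN")


def choose_model(text: str, high: float = 0.8, low: float = 0.2) -> str:
    """Pick a (hypothetical) model name from the share of Ethiopic characters in `text`."""
    eth = sum(is_ethiopic(c) for c in text)
    lat = sum(is_latin(c) for c in text)
    if eth + lat == 0:
        return "latin-ethiopic"           # nothing to go on: fall back to the mixed model
    share = eth / (eth + lat)
    if share >= high:
        return "ethiopic"
    if share <= low:
        return "latin"
    return "latin-ethiopic"
```

Falling back on the mixed model whenever neither script clearly dominates seems a sensible default for notebooks like d’Abbadie’s, where Latin glosses and Ethiopic words often share the same line.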

This presentation of four recent HTR projects on the Ethiopian alpha-syllabary shows once again that the scientific environment and research objectives determine the choice of methods and technical tools.
